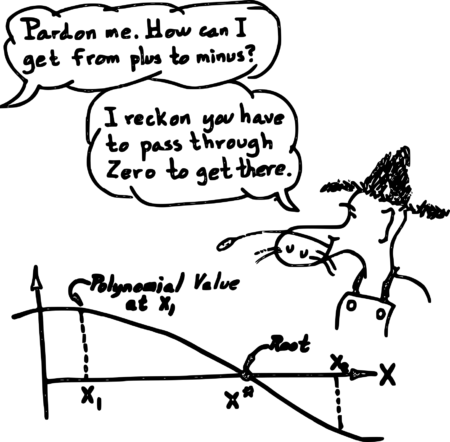

Root Finding

Finding the \(x\) where a scalar function \(f(x) = 0\) is a common problem in numerical programming. There are numerous applications to this. In orbital mechanics, for example, we may want to find the time at which a spacecraft reaches a certain altitude, or enters the Earth's shadow. For these cases, the independent variable is time, and the function \(f\) requires integration of the equations of motion of the spacecraft (for example, by using an IVP solver such as DDEABM). Integrating to an event is often referred to as a "G-stop" feature in these types of applications.

One way to solve this problem is using a derivative-free bracketing method. For these, only function evaluations are used, and the algorithm begins within a set of bounds (\([x_a, x_b]\)) that bracket the root (i.e., \(sign~f(x_a) \ne sign~f(x_b)\)). Over at the FORTRAN 77 Netlib graveyard, the classic zeroin root finding method can be found that implements such an algorithm, namely Brent's method by Australian mathematician Richard Brent from 1971.

In my roots-fortran library, I have modern Fortran implementations of this method and many others, as well as a lot of benchmark test cases. The library can be compiled for single (real32), double (real64), or quad (real128) precision reals.

For real64 numbers and convergence tolerance \(\epsilon\) = 1.0e-15, the following table lists the number of function evaluations for various methods to converge to the root for various test functions:

| \(f(x)\) | \([x_a, x_b]\) | \(x_{root}\) | BS | PE | ITP | TM | BR | AB | MU | CH |

|---|---|---|---|---|---|---|---|---|---|---|

| \((x - 1) e^{-x}\) | \([0,1.5]\) | 1.0 | 46 | 10 | 11 | 9 | 11 | 10 | 9 | 9 |

| \(\cos x - x\) | \([0,1.7]\) | 0.7390851332151607 | 47 | 8 | 9 | 8 | 8 | 8 | 7 | 8 |

| \(e^{x^2 + 7x - 30} - 1\) | \([2.6,3.5]\) | 3.0 | 44 | 20 | 17 | 18 | 12 | 31 | 11 | 11 |

| \(x^3\) | \([-0.5,1/3]\) | 0.0 | 17 | 50 | 16 | 46 | 40 | 38 | 61 | 17 |

| \(x^5\) | \([-0.5,1/3]\) | 0.0 | 11 | 53 | 12 | 20 | 19 | 39 | 35 | 11 |

| \(\cos x - x^3\) | \([0,4]\) | 0.8654740331016144 | 48 | 13 | 11 | 13 | 14 | 15 | 10 | 11 |

| \(x^3 - 1\) | \([0.51,1.5]\) | 1.0 | 46 | 10 | 11 | 9 | 10 | 9 | 7 | 8 |

| \(x^2 ( x^2/3 + \sqrt{2} \sin x ) - \sqrt{3/18}\) | \([0.1,1]\) | 0.3994222917109682 | 47 | 12 | 17 | 10 | 12 | 12 | 9 | 10 |

| \(x^3 + 1\) | \([-1.8,0]\) | -1.0 | 47 | 10 | 10 | 11 | 10 | 12 | 9 | 9 |

| \(\sin((x-7.14)^3)\) | \([7,8]\) | 7.14 | 18 | 51 | 20 | 48 | 46 | 38 | 52 | 18 |

| \(e^{(x-3)^5} - 1\) | \([2.6,3.6]\) | 3.0 | 11 | 55 | 12 | 24 | 26 | 41 | 29 | 11 |

| \(e^{(x-3)^5} - e^{x-1}\) | \([4,5]\) | 4.267168304542125 | 44 | 53 | 45 | 11 | 14 | 58 | 20 | 14 |

| \(\pi - 1/x\) | \([0.05,5]\) | \(1 / \pi\) | 50 | 14 | 49 | 18 | 12 | 5 | 13 | 13 |

| \(4 - \tan x\) | \([0,1.5]\) | 1.325817663668033 | 46 | 14 | 47 | 15 | 13 | 12 | 16 | 15 |

| \(2 x e^{-5} - 2 e^{-5x} + 1\) | \([0,10]\) | 0.1382571550568241 | 52 | 13 | 16 | 13 | 13 | 11 | 13 | 13 |

The methods are: BS = Bisection, PE = Pegasus, ITP, TM = TOMS 748, BR = Brent, AB = Anderson Bjorck, MU = Muller, and CH = Chandrupatla.

There seems to be cottage industry of creating slight variations of this type of method. There are any number of recent papers on this topic, all claiming some improvement. In reality, a lot of the newer methods really aren't great. An obvious trick is a choose functions where your method behaves well. Another is to only compare against very simple methods (such as bisection or regula falsi, which are known to be inefficient). Finally, the most devious trick is to list the number of iterations of the method, rather than the number of function evaluations, which is basically a useless comparison that obscures the real cost for methods that have multiple function evaluations per iteration.

The Brent method is still one of the best performers. The Chandrupatla method from 1997 is also quite good. For the full set of test cases, see the roots-fortran git repo.

Minimization methods

A related type of algorithm are derivative-free minimization methods. The FMIN function at Netlib is based on Brent's original localmin algorithm (modern version here). The PRIMA library implements modern versions of M.J.D. Powell's derivative-free minimizers for multidimensional functions.

See also

- R. P. Brent, "An algorithm with guaranteed convergence for finding a zero of a function", The Computer Journal, Vol 14, No. 4., 1971.

- R. P. Brent, "Algorithms for Minimization Without Derivatives", Prentice-Hall, 1973.

- Gregory W. Chicares, Brent's method outperforms TOMS 748 [Let me illustrate...]

- T. R. Chandrupatla, "A new hybrid quadratic/bisection algorithm for finding the zero of a nonlinear function without derivatives", Advances in Engineering Software, Vol 28, 1997, pp. 145-149.

- M. J. D. Powell, "The BOBYQA algorithm for bound constrained optimization without derivatives," Department of Applied Mathematics and Theoretical Physics, Cambridge England, NA2009/06, 2009.

- polyroots-fortran -- Another modern Fortran library, specifically for finding the roots of polynomials.